Release66:TCE

From NWChem

Tensor Contraction Engine Module: CI, MBPT, and CC

Overview

The Tensor Contraction Engine (TCE) Module of NWChem implements a variety of approximations that converge at the exact solutions of Schrödinger equation. They include configuration interaction theory through singles, doubles, triples, and quadruples substitutions, coupled-cluster theory through connected singles, doubles, triples, and quadruples substitutions, and many-body perturbation theory through fourth order in its tensor formulation. Not only optimized parallel programs of some of these high-end correlation theories are new, but also the way in which they have been developed is unique. The working equations of all of these methods have been derived completely automatically by a symbolic manipulation program called a Tensor Contraction Engine (TCE), and the optimized parallel programs have also been computer-generated by the same program, which were interfaced to NWChem. The development of the TCE program and this portion of the NWChem program has been financially supported by the United States Department of Energy, Office of Science, Office of Basic Energy Science, through the SciDAC program.

The capabilities of the module include:

- Restricted Hartree-Fock, unrestricted Hartree-Fock, and restricted open-shell Hartree-Fock references,

- Restricted KS DFT and unrestricted KS DFT references,

- Unrestricted configuration interaction theory (CISD, CISDT, and CISDTQ),

- Unrestricted coupled-cluster theory (LCCD, CCD, LCCSD, CCSD, QCISD, CCSDT, CCSDTQ),

- Unrestricted iterative many-body perturbation theory [MBPT(2), MBPT(3), MBPT(4)] in its tensor formulation,

- Unrestricted coupled-cluster singles and doubles with perturbative connected triples {CCSD(T), CCSD[T]},

- Unrestricted equation-of-motion coupled-cluster theory (EOM-CCSD, EOM-CCSDT, EOM-CCSDTQ) for excitation energies, transition moments and oscillator strengths, and excited-state dipole moments,

- Unrestricted coupled-cluster theory (CCSD, CCSDT, CCSDTQ) for dipole moments.

- Several variants of active-space CCSDt and EOMCCSDt methods that employ limited set of triply excited cluster amplitudes defined by active orbitals.

- Ground-state non-iterative CC approaches that account for the effect of triply and/or quadruply excited connected clusters: the perturbative approaches based on the similarity transformed Hamiltonian: CCSD(2), CCSD(2)T, CCSDT(2)Q, the completely and locally renormalized methods: CR-CCSD(T), LR-CCSD(T), LR-CCSD(TQ)-1.

- Excited-state non-iterative corrections due to triples to the EOMCCSD excitation energies: the completely renormalized EOMCCSD(T) method (CR-EOMCCSD(T)).

- Dynamic dipole polarizabilities at the CCSD and CCSDT levels using the linear response formalism.

- Ground- and excited- states the iterative second-order model CC2.

- Dynamic dipole polarizabilities at the CCSDTQ level using the linear response formalism.

- State-specific Multireference Coupled Cluster methods (MRCC) (Brillouin-Wigner (BW-MRCC) and Mukherjee (Mk-MRCC) approaches).

- Electron affinity/Ionization potential EOMCCSD formulations (EA/IP-EOMCC; available for RHF reference only).

The distributed binary executables do not contain CCSDTQ and its derivative methods, owing to their large volume. The source code includes them, so a user can reinstate them by setenv CCSDTQ yes and recompile TCE module. The following optimizations have been used in the module:

- Spin symmetry (spin integration is performed wherever possible within the unrestricted framework, making the present unrestricted program optimal for an open-shell system. The spin adaption was not performed, although in a restricted calculation for a closed-shell system, certain spin blocks of integrals and amplitudes are further omitted by symmetry, and consequently, the present unrestricted CCSD requires only twice as many operations as a spin-adapted restricted CCSD for a closed-shell system),

- Point-group symmetry,

- Index permutation symmetry,

- Runtime orbital range tiling for memory management,

- Dynamic load balancing (local index sort and matrix multiplications) parallelism,

- Multiple parallel I/O schemes including fully in-core algorithm using Global Arrays,

- Frozen core and virtual approximation.

- DIIS extrapolation and Jacobi update of excitation amplitudes

- Additional algorithms for the 2-e integral transformation, including efficient and scalable spin-free out-of-core N5 algorithms.

- Hybrid I/O schemes for both spin-orbital and spin-free calculations which eliminate the memory bottleneck of the 2-e integrals in favor of disk storage. Calculations with nearly 400 basis functions at the CCSD(T) can be performed on workstation using this method.

- Parallel check-pointing and restart for ground-state (including property) calculations at the CCSD, CCSDT and CCSDTQ levels of theory.

Performance of CI, MBPT, and CC methods

For reviews or tutorials of these highly-accurate correlation methods, the user is referred to:

- Trygve Helgaker, Poul Jorgensen and Jeppe Olsen, Molecular Electronic-Structure Theory.

- A. Szabo and N. S. Ostlund, Modern Quantum Chemistry: Introduction to Advanced Electronic Structure Theory.

- B. O. Roos (editor), Lecture Notes in Quantum Chemistry.

For background on development of the symbolic algebra tools which help create the code used in this model see:

- S. Hirata, J. Phys. Chem. A 107, 9887 (2003).

- S. Hirata, J. Chem. Phys. 121, 51 (2004).

- S. Hirata, Theor. Chem. Acc. 116, 2 (2006).

For details of particular CC implementations, see:

- S. Hirata, P.-D. Fan, A.A. Auer, M. Nooijen, P. Piecuch, J. Chem. Phys. 121, 12197 (2004).

- K. Kowalski, S. Hirata, M. Wloch, P. Piecuch, T.L. Windus, J. Chem. Phys. 123, 074319 (2005).

- K. Kowalski, P. Piecuch, J. Chem. Phys. 115, 643 (2001).

- K. Kowalski, P. Piecuch, Chem. Phys. Lett. 347, 237 (2001).

- P. Piecuch, S.A. Kucharski, and R.J. Bartlett, J. Chem. Phys. 110, 6103 (1999).

- P. Piecuch, N. Oliphant, and L. Adamowicz, J. Chem. Phys. 99, 1875 (1993).

- N. Oliphant and L. Adamowicz, Int. Rev. Phys. Chem. 12, 339 (1993).

- P. Piecuch, N. Oliphant, and L. Adamowicz, J. Chem. Phys. 99, 1875 (1993).

- K. Kowalski, P. Piecuch, J. Chem. Phys. 120, 1715 (2004).

- K. Kowalski, J. Chem. Phys. 123, 014102 (2005).

- P. Piecuch, K. Kowalski, I.S.O. Pimienta, M.J. McGuire, Int. Rev. Phys. Chem. 21, 527 (2002).

- K. Kowalski, P. Piecuch, J. Chem. Phys. 122, 074107 (2005)

- K. Kowalski, W. de Jong, J. Mol. Struct.: THEOCHEM, 768, 45 (2006).

- O. Christiansen, H. Koch, P. Jørgensen, Chem. Phys. Lett. 243, 409 (1995).

- K. Kowalski, J. Chem. Phys. 125, 124101 (2006).

- K. Kowalski, S. Krishnamoorthy, O. Villa, J.R. Hammond, N. Govind, J. Chem. Phys. 132, 154103 (2010).

- J.R. Hammond, K. Kowalski, W.A. de Jong, J. Chem. Phys. 127, 144105 (2007).

- J.R. Hammond, W.A. de Jong, K. Kowalski, J. Chem. Phys. 128, 224102 (2008).

- J.R. Hammond, K. Kowalski, J. Chem. Phys. 130, 194108 (2009).

- W. Ma. S. Krishnamoorthy, O. Villa, J. Chem. Theory Comput. 7, 1316 (2011).

- J.Brabec, J. Pittner, H.J.J. van Dam, E. Apra, K. Kowalski, J. Chem. Theory Comput. 8, 487 (2012).

- K. Bhaskaran-Nair, J. Brabec, E. Apra, H.J.J. van Dam. J. Pittner, K. Kowalski, J. Chem. Phys. 137, 094112 (2012).

- K. Bhaskaran-Nair, K. Kowalski, J. Moreno, M. Jarrell, W.A. Shelton, J. Chem. Phys. 141, 074304 (2014).

Algorithms of CI, MBPT, and CC methods

Spin, spatial, and index permutation symmetry

The TCE thoroughly analyzes the working equation of many-electron theory models and automatically generates a program that takes full advantage of these symmetries at the same time. To do so, the TCE first recognizes the index permutation symmetries among the working equations, and perform strength reduction and factorization by carefully monitoring the index permutation symmetries of intermediate tensors. Accordingly, every input and output tensor (such as integrals, excitation amplitudes, residuals) has just two independent but strictly ordered index strings, and each intermediate tensor has just four independent but strictly ordered index strings. The operation cost and storage size of tensor contraction is minimized by using the index range restriction arising from these index permutation symmetries and also spin and spatial symmetry integration.

Runtime orbital range tiling

To maintain the peak local memory usage at a manageable level, in the beginning of the calculation, the orbitals are rearranged into tiles (blocks) that contains orbitals with the same spin and spatial symmetries. So the tensor contractions in these methods are carried out at the tile level; the spin, spatial, and index permutation symmetry is employed to reduce the operation and storage cost at the tile level also. The so-called tile-structure of all tensors used in CC equations is also the key-factor determining the parallel structure of the TCE CC codes . The tiling scheme corresponds to partitioning of the spin-orbital domain into smaller subsets containing the spin-orbitals of the same spin and spatial symmetries (the so-called tiles). This partitioning of the spin-orbital domain entails the blocking of all tensors corresponding to one- and two-electron integrals, cluster amplitudes, and all recursive intermediates, into smaller blocks of the size defined by the size of the tile (or tilesize for short). Since the parallel scheme used in all TCE generated codes is deeply rooted in dynamic load balancing techniques, the tile-structure defines the granularity of the work to be distributed. The size of tiles (tilesize) defines also the local memory requirements in all TCE derived CC implementations. For CI/CC/EOMCC/LR-CC models based on the sinlges and doubles models (CISD,CCSD,EOMCCSD,LR-CCSD) the peak local memory requirement is proportional to the (tilesize)4. In approaches accounting for triples, either in iterative or non-iterative fashion, the local memory usage is proportional to (tilesize)6. This means that in the CCSD(T), CCSDt, CCSDT, CR-EOMCCSD(T), EOMCCSDt, EOMCCSDT, LR-CCSDT caluclations the tilesize cannot be defined too large.

Dynamic load balancing parallelism

In a parallel execution, dynamic load balancing of tile-level local tensor index sorting and local tensor contraction (matrix multiplication) will be invoked.

Parallel I/O schemes

Each process is assigned a local tensor index sorting and tensor contraction dynamically. It must first retrieve the tiles of input tensors, and perform these local operations, and accumulate the output tensors to the storage. We have developed a uniform interface for these I/O operations to either (1) a global file on a global file system, (2) a global memory on a global or distributed memory system, and (3) semi-replicated files on a distributed file systems. Some of these operations depend on the ParSoft library.

Input syntax

The keyword to invoke the many-electron theories in the module is TCE. To perform a single-point energy calculation, include

TASK TCE ENERGY

in the input file, which may be preceeded by the TCE input block that details the calculations:

TCE

[(DFT||HF||SCF) default HF=SCF]

[FREEZE [[core] (atomic || <integer nfzc default 0>)] \

[virtual <integer nfzv default 0>]]

[(LCCD||CCD||CCSD||CC2||LR-CCSD||LCCSD||CCSDT||CCSDTA||CCSDTQ|| \

CCSD(T)||CCSD[T]||CCSD(2)_T||CCSD(2)||CCSDT(2)_Q|| \

CR-CCSD[T]||CR-CCSD(T)|| \

LR-CCSD(T)||LR-CCSD(TQ)-1||CREOMSD(T)|| \

QCISD||CISD||CISDT||CISDTQ|| \

MBPT2||MBPT3||MBPT4||MP2||MP3||MP4) default CCSD]

[THRESH <double thresh default 1e-6>]

[MAXITER <integer maxiter default 100>]

[PRINT (none||low||medium||high||debug)

<string list_of_names ...>]

[IO (fortran||eaf||ga||sf||replicated||dra||ga_eaf) default ga]

[DIIS <integer diis default 5>]

[LSHIFT <double lshift default is 0.0d0>]

[NROOTS <integer nroots default 0>]

[TARGET <integer target default 1>]

[TARGETSYM <character targetsym default 'none'>]

[SYMMETRY]

[2EORB]

[2EMET <integer fast2e default 1>]

[T3A_LVL]

[ACTIVE_OA]

[ACTIVE_OB]

[ACTIVE_VA]

[ACTIVE_VB]

[DIPOLE]

[TILESIZE <no default (automatically adjusted)>]

[(NO)FOCK <logical recompf default .true.>]

[FRAGMENT <default -1 (off)>]

END

Also supported are energy gradient calculation, geometry optimization, and vibrational frequency (or hessian) calculation, on the basis of numerical differentiation. To perform these calculations, use

TASK TCE GRADIENT

or

TASK TCE OPTIMIZE

or

TASK TCE FREQUENCIES

The user may also specify the parameters of reference wave function calculation in a separate block for either HF (SCF) or DFT, depending on the first keyword in the above syntax.

Since every keyword except the model has a default value, a minimal input file will be

GEOMETRY Be 0.0 0.0 0.0 END BASIS Be library cc-pVDZ END TCE ccsd END TASK TCE ENERGY

which performs a CCSD/cc-pVDZ calculation of the Be atom in its singlet ground state with a spin-restricted HF reference.

New implementions of the iterative CCSD and EOMCCSD methods based on the improved task scheduling can be enable by the "set tce:nts T" command as in the following example:

geometry/basis set specifications tce freeze atomic creomccsd(t) tilesize 20 2eorb 2emet 13 eomsol 2 end set tce:nts T

task tce energy

New task scheduling should reduce time to solutions and provide better parallel perfromance especially in large CCSD/EOMCCSD runs.

Keywords of TCE input block

HF, SCF, or DFT -- the reference wave function

This keyword tells the module which of the HF (SCF) or DFT module is going to be used for the calculation of a reference wave function. The keyword HF and SCF are one and the same keyword internally, and are default. When these are used, the details of the HF (SCF) calculation can be specified in the SCF input block, whereas if DFT is chosen, DFT input block may be provided.

For instance, RHF-RCCSDT calculation (R standing for spin-restricted) can be performed with the following input blocks:

SCF SINGLET RHF END TCE SCF CCSDT END TASK TCE ENERGY

This calculation (and any correlation calculation in the TCE module using a RHF or RDFT reference for a closed-shell system) skips the storage and computation of all β spin blocks of integrals and excitation amplitudes. ROHF-UCCSDT (U standing for spin-unrestricted) for an open-shell doublet system can be requested by

SCF DOUBLET ROHF END TCE SCF CCSDT END TASK TCE ENERGY

and likewise, UHF-UCCSDT for an open-shell doublet system can be specified with

SCF DOUBLET UHF END TCE SCF CCSDT END TASK TCE ENERGY

The operation and storage costs of the last two calculations are identical. To use the KS DFT reference wave function for a UCCSD calculation of an open-shell doublet system,

DFT ODFT MULT 2 END TCE DFT CCSD END TASK TCE ENERGY

Note that the default model of the DFT module is LDA.

CCSD,CCSDT,CCSDTQ,CISD,CISDT,CISDTQ, MBPT2,MBPT3,MBPT4, etc. -- the correlation models

These keywords stand for the following models:

- LCCD: linearized coupled-cluster doubles,

- CCD: coupled-cluster doubles,

- LCCSD: linearized coupled-cluster singles & doubles,

- CCSD: coupled-cluster singles & doubles (also EOM-CCSD),

- CCSD_ACT: coupled-cluster singles & active doubles (also active-space EOMCCSD),

- LR-CCSD: locally renormalized EOMCCSD method.

- EACCSD: Electron affinity EOMCCSD method.

- IPCCSD: Ionization potential EOMCCSD method.

- CC2: second-order approximate coupled cluster with singles and doubles model

- CCSDT: coupled-cluster singles, doubles, & triples (also EOM-CCSDT),

- CCSDTA: coupled-cluster singles, doubles, & active triples (also EOM-CCSDT). Three variants of the active-space CCSDt and EOMCCSDt approaches can be selected based on various definitions of triply excited clusters: (1) version I (keyword T3A_LVL 1) uses the largest set of triply excited amplitudes defined by at least one occupied and one unoccupied active spinorbital labels. (2) Version II (keyword T3A_LVL 2) uses triply excited amplitudes that carry at least two occupied and unoccupied active spinorbital labels. (3) Version III (keyword T3A_LVL 3) uses triply excited amplitudes that are defined by active indices only. Each version requires defining relevant set of occupied active α and β spinorbitals (ACTIVE_OA and ACTIVE_OB) as well as active unoccupied α and β spinorbitals (ACTIVE_VA and ACTIVE_VB).

- CCSDTQ: coupled-cluster singles, doubles, triples, & quadruples (also EOM-CCSDTQ),

- CCSD(T): CCSD and perturbative connected triples,

- CCSD[T]: CCSD and perturbative connected triples,

- CR-CCSD[T]: completely renormalized CCSD[T] method,

- CR-CCSD(T): completely renormalized CCSD(T) method,

- CCSD(2)_T: CCSD and perturbative CCSD(T)T correction,

- CCSD(2)_TQ: CCSD and perturbative CCSD(2) correction,

- CCSDT(2)_Q: CCSDT and perturbative CCSDT(2)$_Q$ correction.

- LR-CCSD(T): CCSD and perturbative locally renormalized CCSD(T) correction,

- LR-CCSD(TQ)-1: CCSD and perturbative locally renormalized CCSD(TQ) (LR-CCSD(TQ)-1) correction,

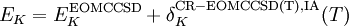

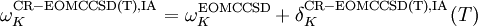

- CREOMSD(T): EOMCCSD energies and completely renormalized EOMCCSD(T)(IA) correction. In this option NWCHEM prints two components: (1) total energy of the K-th state

and (2) the so-called δ-corrected EOMCCSD excitation energy

and (2) the so-called δ-corrected EOMCCSD excitation energy  .

.

- CREOM(T)AC: active-space CR-EOMCCSD(T) approach,

- QCISD: quadratic configuration interaction singles & doubles,

- CISD: configuration interaction singles & doubles,

- CISDT: configuration interaction singles, doubles, & triples,

- CISDTQ: configuration interaction singles, doubles, triples, & quadruples,

- MBPT2=MP2: iterative tensor second-order many-body or Møller-Plesset perturbation theory,

- MBPT3=MP3: iterative tensor third-order many-body or Møller-Plesset perturbation theory,

- MBPT4=MP4: iterative tensor fourth-order many-body or Møller-Plesset perturbation theory,

All of these models are based on spin-orbital expressions of the amplitude and energy equations, and designed primarily for spin-unrestricted reference wave functions. However, for a restricted reference wave function of a closed-shell system, some further reduction of operation and storage cost will be made. Within the unrestricted framework, all these methods take full advantage of spin, spatial, and index permutation symmetries to save operation and storage costs at every stage of the calculation. Consequently, these computer-generated programs will perform significantly faster than, for instance, a hand-written spin-adapted CCSD program in NWChem, although the nominal operation cost for a spin-adapted CCSD is just one half of that for spin-unrestricted CCSD (in spin-unrestricted CCSD there are three independent sets of excitation amplitudes, whereas in spin-adapted CCSD there is only one set, so the nominal operation cost for the latter is one third of that of the former. For a restricted reference wave function of a closed-shell system, all β spin block of the excitation amplitudes and integrals can be trivially mapped to the all α spin block, reducing the ratio to one half).

While the MBPT (MP) models implemented in the TCE module give identical correlation energies as conventional implementation for a canonical HF reference of a closed-shell system, the former are intrinsically more general and theoretically robust for other less standard reference wave functions and open-shell systems. This is because the zeroth order of Hamiltonian is chosen to be the full Fock operatior (not just the diagonal part), and no further approximation was invoked. So unlike the conventional implementation where the Fock matrix is assumed to be diagonal and a correlation energy is evaluated in a single analytical formula that involves orbital energies (or diagonal Fock matrix elements), the present tensor MBPT requires the iterative solution of amplitude equations and subsequent energy evaluation and is generally more expensive than the former. For example, the operation cost of many conventional implementation of MBPT(2) scales as the fourth power of the system size, but the cost of the present tensor MBPT(2) scales as the fifth power of the system size, as the latter permits non-canonical HF reference and the former does not (to reinstate the non-canonical HF reference in the former makes it also scale as the fifth power of the system size).

State-Specific Multireference Coupled Cluster methods (MRCC)

Several State-Specific MRCC methods have been implemented in 6.3 release of nwchem. These include:

- Iterative Brillouin-Wigner MRCC method employing single and double excitations (BW-MRCCSD)

- Iterative Mukherjee MRCC method employing single and double excitations (Mk-MRCCSD)

- Non-iterative methods accounting for triple excitations in MRCC formalisms: BW-MRCCSD(T) and Mk-MRCCSD(T)

The current implementation can be used in studies of systems composed of an even number of correlated electrons (this limitation will be removed in the next release). This includes typical examples of diradical, open-shell singlets, and bond-forming/breaking processes where the corresponding wavefunctions have strong quasidegenerate character.

To enable the compilation of the MRCC codes one has to set the following variable before the compilation of NWChem

export MRCC_METHODS=y

To run MRCC calculations the user has to define two groups in the input file. First, the TCE group and secondly the MRCCDATA group. In the TCE group the iterative level of theory is defined, e.g. BWCCSD or MKCCSD. This implementation was designed for complete model spaces (CMS) which means that the modelspace contains all Slater determinants of all possible (in the context of the spatial and spin symmetry, M_s) distributions of active electrons among active spin orbitals. The user can define the modelspace in two ways. As a first approach the model space can be defined by hand, as shown in the two examples below. The input of the model space starts with the "NREF" keyword followed by the number of reference configurations that will be used, which should equal the number of strings for references below. In the input "2" refers to doubly occupied orbitals, "a" to alpha electrons, "b" to beta electrons and "0" identifies an unoccupied orbital. When the model space is defined by hand the occupation strings have to include the frozen orbitals as well. In the second way the CMS can be generated using the keyword "CAS" followed by the number of active electrons and the number of active orbitals. When using the "CAS" keyword we strongly recommend that users check the references that are generated.

As the model space typically includes multiple configurations it is possible to use the MRCC method to calculate excited states instead of the ground state. For this reason it is required to specify the root of interest. The ROOT keyword followed by the root number specifies the state the code calculates. The lowest root, the ground state, is identified as root 1. If one wants to calculate the third root the keyword ROOT 3 should be used. An example is given below.

echo start tce_mrcc_bwcc memory stack 1000 mb heap 100 mb global 500 mb verify geometry units au H 0.00000000 -2.27289450 -1.58834700 O 0.00000000 0.00000000 -0.01350000 H 0.00000000 2.27289450 -1.58834700 end basis spherical O library cc-pvdz H library cc-pvdz end charge 0 scf rohf singlet thresh 1.0e-10 tol2e 1.0e-10 end tce bwccsd thresh 1.0e-7 targetsym a1 io ga tilesize 18 end mrccdata root 1 nref 4 222220 222202 2222ab 2222ba end task tce energy

echo start tce_mrcc_mkcc memory stack 1000 mb heap 100 mb global 500 mb verify geometry units au H 0.00000000 -2.27289450 -1.58834700 O 0.00000000 0.00000000 -0.01350000 H 0.00000000 2.27289450 -1.58834700 end basis spherical O library cc-pvdz H library cc-pvdz end charge 0 scf rohf singlet thresh 1.0e-10 tol2e 1.0e-10 end tce mkccsd thresh 1.0e-5 targetsym a1 maxiter 100 io ga tilesize 18 end mrccdata root 1 cas 2 2 # Please make sure the references generated are correct. end task tce energy

This version of MRCC works only with GA as specified by the "IO GA" option. In addition this code works only with the spin-orbit 4-index transformation, however in all circumstances an RHF Hartree-Fock initial calculation has to be used. In this implementation the effective Hamiltonian operator contains only scalar, one- and two-body many body components. Finally, in our implementation the BWCCSD methods use the energy threshold for the convergence, whereas the MKCCSD method uses the norm of the residual.

In addition to the iterative single-double calculations the code can calculate non-iterative triples corrections. To request these triples corrections the keyword "SE4T" should be added to the MRCCDATA block. The implementation details and the from of the triples correction are given in equation 20 [ J. Chem. Phys. 137, 094112 (2012)].

echo start tce_mrcc_bwcc memory stack 1000 mb heap 100 mb global 500 mb verify geometry units au H 0.00000000 -2.27289450 -1.58834700 O 0.00000000 0.00000000 -0.01350000 H 0.00000000 2.27289450 -1.58834700 end basis spherical O library cc-pvdz H library cc-pvdz end charge 0 scf rohf singlet thresh 1.0e-10 tol2e 1.0e-10 end tce bwccsd thresh 1.0e-7 targetsym a1 io ga tilesize 18 end mrccdata se4t no_aposteriori root 1 nref 4 222220 222202 2222ab 2222ba end task tce energy

echo start tce_mrcc_mkcc memory stack 1000 mb heap 100 mb global 500 mb verify geometry units au H 0.00000000 -2.27289450 -1.58834700 O 0.00000000 0.00000000 -0.01350000 H 0.00000000 2.27289450 -1.58834700 end basis spherical O library cc-pvdz H library cc-pvdz end charge 0 scf rohf singlet thresh 1.0e-10 tol2e 1.0e-10 end tce mkccsd thresh 1.0e-5 targetsym a1 io ga tilesize 18 maxiter 100 end mrccdata se4t root 1 nref 4 222220 222202 2222ab 2222ba end task tce energy

The current version of the MRCC codes contains also a pilot implementation of the reference-level-parallelism based on the use of processor groups for BWCCSD and BWCCSD(T) approaches. The main ideas of this approach have been described in

- J.Brabec, J. Pittner, H.J.J. van Dam, E. Apra, K. Kowalski, J. Chem. Theory Comput. 8, 487 (2012).

- K. Bhaskaran-Nair, J. Brabec, E. Apra, H.J.J. van Dam. J. Pittner, K. Kowalski, J. Chem. Phys. 137, 094112 (2012).

Two essential keywords have to be added to the "mrccdata" block of the input:

subgroupsize n improvetiling

and

diis 0

in tce block. The "subgroupsize n" defines the size of the subgroup and improvetiling refers to the data representation in the MRCC subgroup algorithm. For example, if user has 4 references and total 32 cores/CPU then n should be defined as 32/4=8. If user has 10 references and 1200 cores/CPU available then the size of the subgroupsize (n) is 120.

echo start tce_mrcc_bwcc_subgroups memory stack 1000 mb heap 100 mb global 500 mb verify geometry units au H 0.00000000 -2.27289450 -1.58834700 O 0.00000000 0.00000000 -0.01350000 H 0.00000000 2.27289450 -1.58834700 end basis spherical O library cc-pvdz H library cc-pvdz end charge 0 scf rohf singlet thresh 1e-12 tol2e 1e-12 end tce bwccsd targetsym a1 io ga diis 0 thresh 1e-7 tilesize 18 end mrccdata subgroupsize 2 # Please read the documentation below. improvetiling root 1 cas 2 2 end task tce energy

CAUTION: Before using the subgroup-based algorithm the users should perform the GA subgroup test in nwchem/src/tools/ga-5-2/global/testing/pgtest.x and pg2test.x in the same location. Additionally it is strongly encouraged to run the NWChem QA tests from the /nwchem/QA/tests/tce_mrcc_bwcc_subgroups directory with various combinations of subgroup size and total number of CPU.

Electron affinity, ionization potential EOMCCSD methods

The EA/IP-EOMCCSD methodologies are available in the 6.5 NWChem release. These implementation are available for the RHF type of the reference function. To enable the compilation of the EA/IP-EOMCCSD codes one has to set the following variable before the compilation of NWChem

export EACCSD=y export IPCCSD=y

Two input examples for the EA/IP-EOMCCSD calculations are shown below.

- EA-EOMCCSD calculations for the ozone molecule

start tce_eaccsd_ozone title "tce_eaccsd_ozone" echo

memory stack 1000 mb heap 200 mb global 500 mb

geometry units bohr symmetry c1 O 0.0000000000 0.0000000000 0.0000000000 O 0.0000000000 -2.0473224350 -1.2595211660 O 0.0000000000 2.0473224350 -1.2595211660 end basis spherical * library cc-pvdz end

scf thresh 1.0e-10 tol2e 1.0e-10 singlet rhf end

tce eaccsd nroots 2 freeze atomic tilesize 20 thresh 1.0d-6 end

task tce energy

- IP-EOMCCSD calculations for the F2 molecule

start tce_ipccsd_f2 title "tce_ipccsd_f2" echo

memory stack 1000 mb heap 200 mb global 500 mb

geometry units angstroms symmetry c1 F 0.0000000000 0.0000000000 0.7059650 F 0.0000000000 0.0000000000 -0.7059650 end

basis spherical * library cc-pvdz end

scf thresh 1.0e-10 tol2e 1.0e-10 singlet rhf end

tce ipccsd nroots 1 freeze atomic thresh 1.0e-7 end

task tce energy

As in the EOMCCSD input we can request any number of roots.

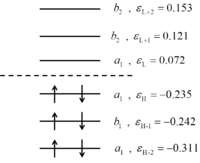

In analogy to the EOMCC calculations we can customize the number of initial guesses by using "set tce:maxeorb" directive. For example for system with the C2V symmetry with the orbital energy structure shown below

one can use the energy window (in the sense of the absolute value of the HF orbital energies) to pinpoint the initial guesses. If one is interested in calculating one EA-EOMCCSD root of the a1 symmetry the

set tce:maxeorb 0.1

should be used. This means that the number of starting vectors will be equal to the number of the unoccupied a1 symmetry orbitals with the corresponding orbital energies less than 0.1 (in our example there will be only one such a vector corresponding to the unoccupied orbital energy 0.072). If one looks for two roots

set tce:maxeorb 0.16

option should be used(there are two a1 unoccupied orbitals with energies less than 0.16).

For the IP-EOMCCSD case the "set tce:maxeorb" option works in a similar way. For example if one is looks for 1 IP-EOMCCSD root of a1 symmetry ,

set tce:maxeorb 0.24

directive should be used (there is only one occupied orbital of a1 symmetry with the absolute value of orbital energy less than 0.24), etc.

THRESH -- the convergence threshold of iterative solutions of amplitude equations

This keyword specifies the convergence threshold of iterative solutions of amplitude equations, and applies to all of the CI, CC, and MBPT models. The threshold refers to the norm of residual, namely, the deviation from the amplitude equations. The default value is 1e-6.

MAXITER -- the maximum number of iterations

It sets the maximum allowed number iterations for the iterative solutions of amplitude equations. The default value is 100.

IO -- parallel I/O scheme

There are five parallel I/O schemes implemented for all the models, which need to be wisely chosen for a particular problem and computer architecture.

- fortran : Fortran77 direct access,

- eaf : Exclusive Access File library,

- ga : Fully incore, Global Array virtual file,

- sf : Shared File library,

- replicated : Semi-replicated file on distributed file system with EAF library.

- dra : Distributed file on distributed file system with DRA library.

- ga_eaf : Semi-replicated file on distributed file system with EAF library. GA is used to speedup the file reconciliation.

The GA algorithm, which is default, stores all input (integrals and excitation amplitudes), output (residuals), and intermediate tensors in the shared memory area across all nodes by virtue of GA library. This fully incore algorithm replaces disk I/O by inter-process communications. This is a recommended algorithm whenever feasible. Note that the memory management through runtime orbital range tiling described above applies to local (unshared) memory of each node, which may be separately allocated from the shared memory space for GA. So when there is not enough shared memory space (either physically or due to software limitations, in particular, shmmax setting), the GA algorithm can crash due to an out-of-memory error. The replicated scheme is the currently the only disk-based algorithm for a genuinely distributed file system. This means that each node keeps an identical copy of input tensors and it holds non-identical overlapping segments of intermediate and output tensors in its local disk. Whenever data coherency is required, a file reconcilation process will take place to make the intermediate and output data identical throughout the nodes. This algorithm, while requiring redundant data space on local disk, performs reasonably efficiently in parallel. For sequential execution, this reduces to the EAF scheme. For a global file system, the SF scheme is recommended. This together with the Fortran77 direct access scheme does not usually exhibit scalability unless shared files on the global file system also share the same I/O buffer. For sequential executions, the SF, EAF, and replicated schemes are interchangeable, while the Fortran77 scheme is appreciably slower.

Two new I/O algorithms dra and ga_eaf combines GA and DRA or EAF based replicated algorithm. In the former, arrays that are not active (e.g., prior T amplitudes used in DIIS or EOM-CC trial vectors) in GA algorithm will be moved to DRA. In the latter, the intermediates that are formed by tensor contractions are initially stored in GA, thereby avoiding the need to accumulate the fragments of the intermediate scattered in EAFs in the original EAF algorithm. Once the intermediate is formed completely, then it will be replicated as EAFs.

The spin-free 4-index transformation algorithms are exclusively compatible with the GA I/O scheme, although out-of-core algorithms for the 4-index transformation are accessible using the 2emet options. See Alternative storage of two-electron integrals for details.

DIIS -- the convergence acceleration

It sets the number iterations in which a DIIS extrapolation is performed to accelerate the convergence of excitation amplitudes. The default value is 5, which means in every five iteration, one DIIS extrapolation is performed (and in the rest of the iterations, Jacobi rotation is used). When zero or negative value is specified, the DIIS is turned off. It is not recommended to perform DIIS every iteration, whereas setting a large value for this parameter necessitates a large memory (disk) space to keep the excitation amplitudes of previous iterations. In 5.0 version we significantly improved the DIIS solver by re-organizing the itrative process and by introducing the level shift option (lshift) that enable to increase small orbital energy differences used in calculating the up-dates for cluster amplitudes. Typical values for lshift oscillates between 0.3 and 0.5 for CC calculations for ground states of multi-configurational character. Otherwise, the value of lshift is by default set equal to 0.

FREEZE -- the frozen core/virtual approximation

Some of the lowest-lying core orbitals and/or some of the highest-lying virtual orbitals may be excluded in the calculations by this keyword (this does not affect the ground state HF or DFT calculation). No orbitals are frozen by default. To exclude the atom-like core regions altogether, one may request

FREEZE atomic

To specify the number of lowest-lying occupied orbitals be excluded, one may use

FREEZE 10

which causes 10 lowest-lying occupied orbitals excluded. This is equivalent to writing

FREEZE core 10

To freeze the highest virtual orbitals, use the virtual keyword. For instance, to freeze the top 5 virtuals

FREEZE virtual 5

NROOTS -- the number of excited states

One can specify the number of excited state roots to be determined. The default value is 1. It is advised that the users request several more roots than actually needed, since owing to the nature of the trial vector algorithm, some low-lying roots can be missed when they do not have sufficient overlap with the initial guess vectors.

TARGET and TARGETSYM -- the target root and its symmetry

At the moment, the first and second geometrical derivatives of excitation energies that are needed in force, geometry, and frequency calculations are obtained by numerical differentiation. These keywords may be used to specify which excited state root is being used for the geometrical derivative calculation. For instance, when TARGET 3 and TARGETSYM a1g are included in the input block, the total energy (ground state energy plus excitation energy) of the third lowest excited state root (excluding the ground state) transforming as the irreducible representation a1g will be passed to the module which performs the derivative calculations. The default values of these keywords are 1 and none, respectively.

The keyword TARGETSYM is essential in excited state geometry optimization, since it is very common that the order of excited states changes due to the geometry changes in the course of optimization. Without specifying the TARGETSYM, the optimizer could (and would likely) be optimizing the geometry of an excited state that is different from the one the user had intended to optimize at the starting geometry. On the other hand, in the frequency calculations, TARGETSYM must be none, since the finite displacements given in the course of frequency calculations will lift the spatial symmetry of the equilibrium geometry. When these finite displacements can alter the order of excited states including the target state, the frequency calculation is not be feasible.

SYMMETRY -- restricting the excited state symmetry

By adding this keyword to the input block, the user can request the module to seek just the roots of the specified irreducible representation as TARGETSYM. By default, this option is not set. TARGETSYM must be specified when SYMMETRY is invoked.

EOMSOL -- alternative solver for the right EOMCCSD eigenvalue problem

The EOMSOL enables the user to switch between two algorithms for solving EOMCCSD eigenproblem. When EOMSOL is set equal to 1 ("eomsol 1" directive in the tce group) the old solver is invoked. The advantage of this solver is a possibility of finding states of complicated configurational structure, for example doubly excited states. However, the dimension of the iterative space increases with each iteration and in effect this algorithm requires large memory allocations especially for large systems. In order to address this bottleneck, new algorithm ("eomsol 2" directive in the tce group) was designed. In EOMSOL 2 algorithm all iterations are split into microcycles corresponding to diis microiterations (the use of "diis" parameter is discussed earlier). This algorithm enables the user to precisely estimate the memory usage in the EOMCCSD calculations, which is equal to diis*nroots*(size_x1+size_x2), where diis is the length of the DIIS cycle, nroots is the number of sought roots, size_x1 corresponds to the size of GA storing singly excited EOMCC almplitudes, and size_x2 is the size of GA with doubly excited EOMCC amplitudes. Generally, larger values of diis parameter lead to a faster convergence, however, this happens at the expense of larger memory requirements. It is recommended not to use in the EOMCCSD calculations with "eomsol 2" diis parameter smaller than 5, which is its default value. The EOMSOL 2 algorithm uses the CIS vectors as initial guesses, and for this reason is suited mainly to track singly excited states. By default, the EOMSOL 1 option is called in the EOMCCSD calculations. It should be also stressed that all iterative EOMCC methods with higher than double excitations use EOMSOL 1 approach.

In some situations it is convenient to use separate convergence threshold for the CCSD and EOMCCSD solvers. This can be achieved by setting proper environmetal variables. In the following example

geometry/basis set specifications tce thresh 1.0d-6 ccsd nroots 2 end set tce:thresheom 1.0d-4 task tce energy

the CCSD equations will be converged to the 1.0d-6 threshold while the EOMCCSD ones to 1.0d-4. This option shoul dbe used with the "eomsol 2" option. In some situations finding several (n) roots to the EOMCCSD equations can be quite challenging. To by-pass this problem one can use the "n+1" model, i.e., we request another root to be converged. Usually, the presence the "buffer" root can imporve the iterative process for n roots of interest. However, the buffer root does not have to be converged to the same accuracy as n roots of interest. The follwing example, shows how to handle this process (we chose n=2, n+1=3):

geometry/basis set specifications tce freeze core ccsd nroots 3 thresh 1.0d-6 end set tce:thresheom 1.0d-4 set tce:threshl 1.0d-3 task tce energy

In this example the CCSD equations are solved with the 1.0d-6 threshold, the first n (2) EOMCCSD roots are determined with the 10d-4 accuracy, while the buffer root is determined with relax conv. criterion 1.0d-3.

2EORB -- alternative storage of two-electron integrals

In the 5.0 version a new option has been added in order to provide more economical way of storing two-electron integrals used in CC calculations based on the RHF and ROHF references. The 2EORB keyword can be used for all CC methods except for those using an active-space (CCSDt). All two-electron integrals are transformed and subsequently stored in a way which is compatible with assumed tiling scheme. The transformation from orbital to spinorbital form of the two-electron integrals is performed on-the-fly during execution of the CC module. This option, although slower, allows to significantly reduce the memory requirements needed by the first half of 4-index transformation and final file with fully transformed two-electron integrals. Savings in the memory requirements on the order of magnitude (and more) have been observed for large-scale open-shell calculations.

2EMET -- alternative storage of two-electron integrals

Several new computation-intensive algorithms has been added with the purpose of improving scalability and overcoming local memory bottleneck of the 5.0 2EORB 4-index transformation. In order to give the user a full control over this part of the TCE code several keywords were designed to define the most vital parameters that determine the perfromance of 4-index transformation. All new keywords must be used with the 2EORB keyword. The 2emet keyword (default value 1 or 2emet 1, refers to the older 4-index transformation), defines the algorithm to be used. By putting 2emet 2 the TCE code will execute the algoritm based on the two step procedure with two intermediate files. In some instances this algorithm is characterized by better timings compared to algorithms 3 and 4, although it is more memory demanding. In contrast to algorithms nr 1,3, and 4 this approach can make use of a disk to store intermediate files. For this purpose one should use the keyword idiskx (idiskx 0 causes that all intermediate files are stored on global arrays, while idiskx 1 tells the code to use a disk to store intermediates; default value of idiskx is equal 0). Algorithm nr 3 (2emet 3) uses only one intermediate file whereas algorithm nr 4 (2emet 4) is a version of algorithm 3 with the option of reducing the memory requirements. For example, by using new keyword split 4 we will reduce the size of only intermediate file by factor of 4 (the split keyword can be only used in the context of algorithm nr 4). All new algorithms (i.e. 2emet 2+) use the attilesize setting to define the size of the atomic tile. By default attilesize is set equal 30. For larger systems the use of larger values of attilesize is recommended (typically between 40-60).

Additional algorithms are numbered 5, 6 and 9. Other values of 2emet are not supported and refer to methods which do not function properly. Algorithms 5 and 6 were written as out-of-core N5 methods (idiskx 1) and are the most efficient algorithms at the present time. The corresponding in-core variants (idiskx 0) are available but require excessive memory with respect to the methods discussed above, although they may be faster if sufficient memory is available (to get enough memory often requires excessive nodes, which decreases performance in the later stages of the calculation). The difference between 5 and 6 is that 5 writes to a single file (SF or GA) while 6 uses multiple files. For smaller calculations, particularly single-node jobs, 5 is faster than 6, but for more than a handful of processors, algorithm 6 should be used. The perforance discrepancy depends on the hardware used but in algorithm eliminates simulataneous disk access on parallel file systems or memory mutexes for the in-core case. For NFS filesystems attached to parallel clusters, no performance differences have been observed, but for Lustre and PVFS they are signficant. Using algorithm 5 for large parallel file systems will make the file system inaccessible to other users, invoking the wrath of system administrators.

Algorithm 9 is an out-of-core solution to the memory bottleneck of the 2-e integrals. In this approach, the intermediates of the 4-index transformation as well as the MO integrals are stored in an SF file. As before, this requires a shared file system. Because algorithm 9 is based upon algorithm 5, described above, it is not expected to scale. The primary purpose of algorithm 9 is to make the performance of the NWChem coupled-cluster codes competive with fast serial codes on workstations. It succeeds in this purpose when corresponding functionality is compared. A more scalable version of this algorithm is possible, but the utility is limited since large parallel computers do not permit the wall times necessary to use an out-of-core method, which is necessarily slower than the in-core variant. An extensible solution to these issues using complex heterogeneous I/O is in development. Restarting with algorithm 9 is not supported and attempting to use this feature with the present version may produce meaningless results.

New is the inclusion of multiple 2emet options for the spin-orbital transformations, which are the default when 2eorb is not set and are mandatory for UHF and KS references. The are currently three algorithms 1, 2 and 3 available. The numbering scheme does not correspond in any way to the numbering scheme for the 2eorb case, except that 2emet 1 corresponds to the default algorithm present in previous releases, which uses the user-defined I/O scheme. Algorithm 2 (2emet 2) writes an SF file for the half-transformed integrals, which is at least an order-of-magnitude larger than the fully-transformed integrals, but stores the fully-transformed integrals in core. Thus, once the 4-index transformation is complete, this algorithm will perform exactly as when algorithm 1 is used. Unfortuntely, the spin-orbital 2-e fully-transformed integrals are still quite large and an algorithm corresponding to 2eorb/2emet=9 is available with 2emet 3. Algorithm 3 is also limited in its scalability, but it permits relatively large UHF-based calculations using single workstations for patient users.

In cases where the user has access to both shared and local filesystems for parallel calculations, the permanent_dir setting refers to the location of SF files. The file system for scratch_dir will not be used for any of the 4-index transformation algorithms which are compatible with io=ga.

Algorithms 13 and 14 are the N5 variants of algorithms 3 and 4. They are the most efficient in core GA-based algorithms for the RHF and ROHF reference functions. Again, two parameters are needed to define the perfromance of these algorithms: tilesize and attilesize. By default attilesize is set equal to 40. In all runs tilesize is required to be less than attilesize (tilesize < attilesize).

New 4-index transformation for RHF/ROHF references (2emet 15) is available in NWChem 6.5. In contrast to algorithms 13 and 14 inter-processor communication is significantly reduced resulting in much better performance.

In the later part of this manual several examples illustrate the use of the newly introduced keywords.

CCSD(T)/CR-EOMCCSD(T) calculations with large tiles

In 6.5 version of NWChem we have enabled versions of the CCSD(T) and CR-EOMCCSD(T) codes, which by-pass the local memory limitation of previous implementations. For this purpose a sliced versions of the CCSD(T)/CR-EOMCCSD(T) codes have been developed (see K. Kowalski, S. Krishnamoorthy, R. Olson, V. Tipparaju, E. Apra, Supercomputing 2011, Seattle). In order to enable these versions it is enough to add

set tce:xmem 100

which defines maximum memory size (in MB) for the slice of 6-dimensional tensors (in the current example 100 MB; for more details see QA tests tce_ccsd_t_xmem and tce_cr_eomccsd_t_xmem).

DIPOLE -- the ground- and excited-state dipole moments

When this is set, the ground-state CC calculation will enter another round of iterative step for the so-called $\Lambda$ equation to obtain the one-particle density matrix and dipole moments. Likewise, for excited-states (EOM-CC), the transition moments and dipole moments will be computed when (and only when) this option is set. In the latter case, EOM-CC left hand side solutions will be sought incurring approximately three times the computational cost of excitation energies alone (note that the EOM-CC effective Hamiltonian is not Hermitian and has distinct left and right eigenvectors).

(NO)FOCK -- (not) recompute Fock matrix

The default is FOCK meaning that the Fock matrix will be reconstructed (as opposed to using the orbital energies as the diagonal part of Fock). This is essential in getting correct correlation energies with ROHF or DFT reference wave functions. However, currently, this module cannot reconstruct the Fock matrix when one-component relativistic effects are operative. So when a user wishes to run TCE's correlation methods with DK or other relativistic reference, NOFOCK must be set and orbital energies must be used for the Fock matrix.

PRINT -- the verbosity

This keyword changes the level of output verbosity. One may also request some particular items in the table below.

| Item | Print Level | Description |

| "time" | vary | CPU and wall times |

| "tile" | vary | Orbital range tiling information |

| "t1" | debug | T1 excitation amplitude dumping |

| "t2" | debug | T2 excitation amplitude dumping |

| "t3" | debug | T3 excitation amplitude dumping |

| "t4" | debug | T4 excitation amplitude dumping |

| "general information" | default | General information |

| "correlation information" | default | TCE information |

| "mbpt2" | debug | Caonical HF MBPT2 test |

| "get_block" | debug | I/O information |

| "put_block" | debug | I/O information |

| "add_block" | debug | I/O information |

| "files" | debug | File information |

| "offset" | debug | File offset information |

| "ao1e" | debug | AO one-electron integral evaluation |

| "ao2e" | debug | AO two-electron integral evaluation |

| "mo1e" | debug | One-electron integral transformation |

| "mo2e" | debug | Two-electron integral transformation |

Sample input

The following is a sample input for a ROHF-UCCSD energy calculation of a water radical cation.

START h2o TITLE "ROHF-UCCSD/cc-pVTZ H2O" CHARGE 1 GEOMETRY O 0.00000000 0.00000000 0.12982363 H 0.75933475 0.00000000 -0.46621158 H -0.75933475 0.00000000 -0.46621158 END BASIS * library cc-pVTZ END SCF ROHF DOUBLET THRESH 1.0e-10 TOL2E 1.0e-10 END TCE CCSD END TASK TCE ENERGY

The same result can be obtained by the following input:

START h2o TITLE "ROHF-UCCSD/cc-pVTZ H2O" CHARGE 1 GEOMETRY O 0.00000000 0.00000000 0.12982363 H 0.75933475 0.00000000 -0.46621158 H -0.75933475 0.00000000 -0.46621158 END BASIS * library cc-pVTZ END SCF ROHF DOUBLET THRESH 1.0e-10 TOL2E 1.0e-10 END TASK UCCSD ENERGY

EOMCCSD calculations with EOMSOL 2 algorithm. In these claculations the diis value of 8 will be used both in the CCSD and EOMCCSD iterations.

TITLE "tce_eomccsd_eomsol2" ECHO START tce_eomccsd_eomsol2 GEOMETRY UNITS ANGSTROM N .034130 -.986909 .000000 N -1.173397 .981920 .000000 C -1.218805 -.408164 .000000 C -.007302 1.702153 .000000 C 1.196200 1.107045 .000000 C 1.289085 -.345905 .000000 O 2.310232 -.996874 .000000 O -2.257041 -1.026495 .000000 H .049329 -1.997961 .000000 H -2.070598 1.437050 .000000 H -.125651 2.776484 .000000 H 2.111671 1.674079 .000000 END BASIS * library 6-31G END SCF THRESH 1.0e-10 TOL2E 1.0e-10 SINGLET RHF END TCE FREEZE ATOMIC CREOMSD(T) EOMSOL 2 DIIS 8 TILESIZE 15 THRESH 1.0d-5 2EORB 2EMET 13 NROOTS 1 END TASK TCE ENERGY

EOM-CCSDT calculation for excitation energies, excited-state dipole, and transition moments.

START tce_h2o_eomcc GEOMETRY UNITS BOHR H 1.474611052297904 0.000000000000000 0.863401706825835 O 0.000000000000000 0.000000000000000 -0.215850436155089 H -1.474611052297904 0.000000000000000 0.863401706825835 END BASIS * library sto-3g END SCF SINGLET RHF END TCE CCSDT DIPOLE FREEZE CORE ATOMIC NROOTS 1 END TASK TCE ENERGY

Active-space CCSDt/EOMCCSDt calculations (version I) of several excited states of the Be3 molecule. Three highest-lying occupied α and β orbitals (active_oa and active_ob) and nine lowest-lying unoccupied α and β orbitals (active_va and active_vb) define the active space.

START TCE_ACTIVE_CCSDT ECHO GEOMETRY UNITS ANGSTROM SYMMETRY C2V BE 0.00 0.00 0.00 BE 0.00 1.137090 -1.96949 end BASIS spherical # --- DEFINE YOUR BASIS SET --- END SCF THRESH 1.0e-10 TOL2E 1.0e-10 SINGLET RHF END TCE FREEZE ATOMIC CCSDTA TILESIZE 15 THRESH 1.0d-5 ACTIVE_OA 3 ACTIVE_OB 3 ACTIVE_VA 9 ACTIVE_VB 9 T3A_LVL 1 NROOTS 2 END TASK TCE ENERGY

Completely renormalized EOMCCSD(T) (CR-EOMCCSD(T)) calculations for the ozone molecule as described by the POL1 basis set. The CREOMSD(T) directive automatically initialize three-step procedure: (1) CCSD calculations; (2) EOMCCSD calculations; (3) non-iterative CR-EOMCCSD(T) corrections.

START TCE_CR_EOM_T_OZONE

ECHO

GEOMETRY UNITS BOHR

SYMMETRY C2V

O 0.0000000000 0.0000000000 0.0000000000

O 0.0000000000 -2.0473224350 -1.2595211660

O 0.0000000000 2.0473224350 -1.2595211660

END

BASIS SPHERICAL

O S

10662.285000000 0.00079900

1599.709700000 0.00615300

364.725260000 0.03115700

103.651790000 0.11559600

33.905805000 0.30155200

O S

12.287469000 0.44487000

4.756805000 0.24317200

O S

1.004271000 1.00000000

O S

0.300686000 1.00000000

O S

0.090030000 1.00000000

O P

34.856463000 0.01564800

7.843131000 0.09819700

2.306249000 0.30776800

0.723164000 0.49247000

O P

0.214882000 1.00000000

O P

0.063850000 1.00000000

O D

2.306200000 0.20270000

0.723200000 0.57910000

O D

0.214900000 0.78545000

0.063900000 0.53387000

END

SCF

THRESH 1.0e-10

TOL2E 1.0e-10

SINGLET

RHF

END

TCE

FREEZE ATOMIC

CREOMSD(T)

TILESIZE 20

THRESH 1.0d-6

NROOTS 2

END

TASK TCE ENERGY

The input for the active-space CR-EOMCCSD(T) calculations (the uracil molecule in the 6-31G* basis set). In this example, the model space is specified by defining the number of highest occupied orbitals (noact) and the number of lowest unoccupied orbitals (nuact) that will be considered as the active orbitals. In any type of the active-space CR-EOMCCSD(T) calculatoins based on the RHF and ROHF references more efficient versions of the orbital 4-index transformation can be invoked (i.e., 2emet 13 or 2emet 14).

title "uracil-6-31-Gs-act" echo start uracil-6-31-Gs-act memory stack 1000 mb heap 100 mb global 1000 mb noverify geometry units angstrom N .034130 -.986909 .000000 N -1.173397 .981920 .000000 C -1.218805 -.408164 .000000 C -.007302 1.702153 .000000 C 1.196200 1.107045 .000000 C 1.289085 -.345905 .000000 O 2.310232 -.996874 .000000 O -2.257041 -1.026495 .000000 H .049329 -1.997961 .000000 H -2.070598 1.437050 .000000 H -.125651 2.776484 .000000 H 2.111671 1.674079 .000000 end basis cartesian * library 6-31G* end scf thresh 1.0e-10 tol2e 1.0e-10 singlet rhf end tce freeze atomic creom(t)ac oact 21 uact 99 tilesize 15 thresh 1.0d-5 2eorb 2emet 13 nroots 1 symmetry targetsym a' end task tce energy

The active-space in the active-space CR-EOMCCSD(T) calculations can be alternatively specified by defining the energy "window" [emin_act,emax_act]. All orbitals with orbital energies falling into this widnow will considered as active (the active space in the following example is different from the one used in the previous example).

title "uracil-6-31-Gs-act" echo start uracil-6-31-Gs-act memory stack 1000 mb heap 100 mb global 1000 mb noverify geometry units angstrom N .034130 -.986909 .000000 N -1.173397 .981920 .000000 C -1.218805 -.408164 .000000 C -.007302 1.702153 .000000 C 1.196200 1.107045 .000000 C 1.289085 -.345905 .000000 O 2.310232 -.996874 .000000 O -2.257041 -1.026495 .000000 H .049329 -1.997961 .000000 H -2.070598 1.437050 .000000 H -.125651 2.776484 .000000 H 2.111671 1.674079 .000000 end basis cartesian * library 6-31G* end scf thresh 1.0e-10 tol2e 1.0e-10 singlet rhf end tce freeze atomic creom(t)ac emin_act -0.5 emax_act 1.0 tilesize 15 thresh 1.0d-5 2eorbe 2emet 13 nroots 1 symmetry targetsym a' end task tce energy

The LR-CCSD(T) calculations for the glycine molecule in the aug-cc-pVTZ basis set. Option 2EORB is used in order to minimize memory requirements associated with the storage of two-electron integrals.

START TCE_LR_CCSD_T ECHO GEOMETRY UNITS BOHR O -2.8770919486 1.5073755650 0.3989960497 C -0.9993929716 0.2223265108 -0.0939400216 C 1.6330980507 1.1263991128 -0.7236778647 O -1.3167079358 -2.3304840070 -0.1955378962 N 3.5887721300 -0.1900460352 0.6355723246 H 1.7384347574 3.1922914768 -0.2011420479 H 1.8051078216 0.9725472539 -2.8503867814 H 3.3674278149 -2.0653924379 0.5211399625 H 5.2887327108 0.3011058554 -0.0285088728 H -3.0501350657 -2.7557071585 0.2342441831 END BASIS * library aug-cc-pVTZ END SCF THRESH 1.0e-10 TOL2E 1.0e-10 SINGLET RHF END TCE FREEZE ATOMIC 2EORB TILESIZE 15 LR-CCSD(T) THRESH 1.0d-7 END TASK TCE ENERGY

The CCSD calculations for the triplet state of the C20 molecule. New algorithms for 4-index tranformation are used.

title "c20_cage" echo start c20_cage memory stack 2320 mb heap 180 mb global 2000 mb noverify geometry print xyz units bohr symmetry c2 C -0.761732 -1.112760 3.451966 C 0.761732 1.112760 3.451966 C 0.543308 -3.054565 2.168328 C -0.543308 3.054565 2.168328 C 3.190553 0.632819 2.242986 C -3.190553 -0.632819 2.242986 C 2.896910 -1.982251 1.260270 C -2.896910 1.982251 1.260270 C -0.951060 -3.770169 0.026589 C 0.951060 3.770169 0.026589 C 3.113776 2.128908 0.076756 C -3.113776 -2.128908 0.076756 C 3.012003 -2.087494 -1.347695 C -3.012003 2.087494 -1.347695 C 0.535910 -2.990532 -2.103427 C -0.535910 2.990532 -2.103427 C 3.334106 0.574125 -2.322563 C -3.334106 -0.574125 -2.322563 C -0.764522 -1.081362 -3.453211 C 0.764522 1.081362 -3.453211 end basis spherical * library cc-pvtz end scf triplet rohf thresh 1.e-8 maxiter 200 end tce ccsd maxiter 60 diis 5 thresh 1.e-6 2eorb 2emet 3 attilesize 40 tilesize 30 freeze atomic end task tce energy

TCE Response Properties

Introduction

Response properties can be calculated within the TCE. Ground-state dipole polarizabilities can be performed at the CCSD, CCSDT and CCSDTQ levels of theory. Neither CCSDT-LR nor CCSDTQ-LR are compiled by default. Like the rest of the TCE, properties can be calculated with RHF, UHF, ROHF and DFT reference wavefunctions.

Specific details for the implementation of CCSD-LR and CCSDT-LR can be found in the following papers:

- J. R. Hammond, M. Valiev, W. A. deJong and K. Kowalski, J. Phys. Chem. A, 111, 5492 (2007).

- J. R. Hammond, K. Kowalski and W. A. de Jong, J. Chem. Phys., 127, 144105 (2007).

- J. R. Hammond, W. A. de Jong and K. Kowalski , J. Chem. Phys., 128, 224102 (2008).

An appropriate background on coupled-cluster linear response (CC-LR) can be found in the references of those papers.

Performance

The coupled-cluster response codes were generated in the same manner as the rest of the TCE, thus all previous comments on performance apply here as well. The improved offsets available in the CCSD and EOM-CCSD codes is now also available in the CCSD-Λ and CCSD-LR codes. The bottleneck for CCSD-LR is the same as EOM-CCSD, likewise for CCSDT-LR and EOM-CCSDT. The CCSD-LR code has been tested on as many as 1024 processors for systems with more than 2000 spin-orbitals, while the CCSDT-LR code has been run on as many as 1024 processors. The CCSDTQ-LR code is not particularly useful due to the extreme memory requirements of quadruples amplitudes, limited scalability and poor convergence in the CCSDTQ equations in general.

Input

The input commands for TCE response properties exclusively use set directives (see SET) instead of TCE input block keywords. There are currently only three commands available:

set tce:lineresp <logical lineresp default: F> set tce:afreq <double precision afreq(9) default: 0.0> set tce:respaxis <logical respaxis(3) default: T T T>

The boolean variable lineresp invokes the linear response equations for the corresponding coupled-cluster method (only CCSD and CCSDT possess this feature) and evaluates the dipole polarizability. When lineresp is true, the Λ-equations will also be solved, so the dipole moment is also calculated. If no other options are set, the complete dipole polarizability tensor will be calculated at zero frequency (static). Up to nine real frequencies can be set; adding more should not crash the code but it will calculate meaningless quanities. If one desires to calculate more frequencies at one time, merely change the line double precision afreq(9) in $(NWCHEM_TOP)/src/tce/include/tce.fh appropriately and recompile.

The user can choose to calculate response amplitudes only for certain axis, either because of redundancy due to symmetry or because of memory limitations. The boolean vector of length three respaxis is used to determine whether or not a given set of response amplitudes are allocated, solved for, and used in the polarizability tensor evaluation. The logical variables represent the X, Y, Z axes, respectively. If respaxis is set to T F T, for example, the responses with respect to the X and Z dipoles will be calculated, and the four (three unique) tensor components will be evaluated. This feature is also useful for conserving memory. By calculating only one axis at a time, memory requirements can be reduced by $25\%$ or more, depending on the number of DIIS vectors used. Reducing the number of DIIS vectors also reduces the memory requirements.

It is strongly advised that when calculating polarizabilities at high-frequencies, that user set the frequencies in increasing order, preferably starting with zero or other small value. This approach is computationally efficient (the initial guess for subsequent responses is the previously converged value) and mitigates starting from a zero vector for the response amplitudes.

Examples

This example runs in-core on a large workstation.

geometry units angstrom symmetry d2h C 0.000 1.390 0.000 H 0.000 2.470 0.000 C 1.204 0.695 0.000 H 2.139 1.235 0.000 C 0.000 -1.390 0.000 H 0.000 -2.470 0.000 C -1.204 -0.695 0.000 H -2.139 -1.235 0.000 C 1.204 -0.695 0.000 H 2.139 -1.235 0.000 C -1.204 0.695 0.000 H -2.139 1.235 0.000 end basis spherical * library aug-cc-pvdz end tce freeze atomic ccsd io ga 2eorb tilesize 16 end set tce:lineresp T set tce:afreq 0.000 0.072 set tce:respaxis T T T task tce energy

This is a relatively simple example for CCSDT-LR.

geometry units au symmetry c2v H 0 0 0 F 0 0 1.7328795 end basis spherical * library aug-cc-pvdz end tce ccsdt io ga 2eorb end set tce:lineresp T set tce:afreq 0.0 0.1 0.2 0.3 0.4 set tce:respaxis T F T task tce energy

TCE Restart Capability

Overview

Check-pointing and restart are critical for computational chemistry applications of any scale, but particularly those done on supercomputers, or run for an extended period on workstations and clusters. The TCE supports parallel check-pointing and restart using the Shared Files (SF) library in the Global Arrays Tools. The SF library requires that the file system be accessible by every node, so reading and writing restart files can only be performed on a shared file system. For workstations, this condition is trivially met. Restart files must be persistent to be useful, so volatile file systems or those which are periodicly erased should not be used for check-pointing.

Restart is possible for all ground-state amplitudes (T, Λ and T(1)) but not for excited-state amplitudes, as in an EOM-CC calculation. The latter functionality is under development.

Restart capability is useful in the following situations:

- The total run time is limited, as is the case for most supercomputing facilities.

- The system is volatile and jobs die randomly.

- When doing property calculations which require a large number of responses which cannot all be stored in-core simultaneously.

At the present time, restarting the amplitudes during a potential energy surface scan or numerical geometry optmization/frequency calculation is not advised due to the phase issue in the molecular orbital coefficients. If the phase changes, the amplitudes will no longer be a useful guess and may lead to nonsense results. Expert users may be able to use restart when the geometry varies using careful choices in the SCF input by using the "rotate" and "lock" options but this use of restart is not supported.

If SF encounters a failure during restart I/O, the job will fail. The capability to ignore a subset of failures, such as when saving the amplitudes prior to convergence, will be available in the future. This is useful on some large machines when the filesystem is being taxed by another job and may be appear unavailable at the moment a check-point write is attempted.

The performance of SF I/O for restart is excellent and the wall time for reading and writing integrals and amplitudes is negligible, even on a supercomputer (such systems have very fast parallel file systems in most cases). The only platform for which restart may cause I/O problems is BlueGene, due to ratio of compute to I/O nodes (64 on BlueGene/P).

Input

set tce:read_integrals <logical read_integrals default: F F F F F> set tce:read_t <logical read_t default: F F F F> set tce:read_l <logical read_l default: F F F F> set tce:read_tr <logical read_tr default: F F F F> set tce:save_integrals <logical save_integrals default: F F F F F> set tce:save_t <logical save_t default: F F F F> set tce:save_l <logical save_l default: F F F F> set tce:save_tr <logical save_tr default: F F F F> set tce:save_interval <integer save_interval default: 100000>

The boolean variables read_integrals and save_integrals control which integrals are read/saved. The first location is the 1-e integrals, the second is for the 2-e integrals, and the third is for dipole integrals. The fourth and fifth positions are reserved for quadrupole and octupole integrals but this functionality is not available. The read_t, read_l, read_tr, save_t, save_l and save_tr variables control the reading/saving of the T, Λ and T(1) (response) amplitudes. Restart control on the response amplitudes is implicitly controlled by the value of respaxis (see above). Requesting amplitudes that are beyond the scope of a given calculation, such as T3 in a CCSD calculation, does not produce an error as these commands will never be processed.

Attempting to restart with a set of amplitudes without the corresponding integrals is ill-advised, due to the phase issue discussed above. For the same reason, one cannot save a subset of the integrals, so if it is even remotely possible that the dipole moment or polarizabilities will be desired for a given molecule, the dipole integrals should be saved as well. It is possible to save the dipole integrals without setting dipole in the TCE input block; setting save_integrals(3) true is sufficient for this to occur.

The save_interval variable controls the frequency with which amplitudes are saved. By default, the amplitudes are saved only when the iterative process has converged, meaning that if the iterations do not converge in less than the maximum, one must start the calculation again from scratch. The solution is to set save_interval to a smaller value, such as the number of DIIS cycles being used.

The user shall not change the tilesize when reading in saved amplitudes. The results of this are catastrophic and under no circumstance will this lead to physically meaningful results. Restart does not work for 2eorb and 2emet 9; no error will be produced but the results may be meaningless.

Examples

geometry units au symmetry c2v H 0 0 0 F 0 0 1.7328795 end basis spherical * library aug-cc-pvdz end tce ccsdt io ga end set tce:lineresp T set tce:afreq 0.0 0.1 0.2 0.3 0.4 set tce:respaxis T F T task tce energy

Maximizing performance

The following are recommended parameters for getting the best performance and efficiency for common methods on various hardware configurations. The optimal settings are far from extensible and it is extremely important that users take care in how they apply these recommendations. Testing a variety of settings on a simple example is recommended when optimal settings are desired. Nonetheless, a few guiding principles will improve the performance of TCE jobs markedly, including making otherwise impossible jobs possible.

Memory considerations

The default memory settings for NWChem are not optimal for TCE calculations. When 2 GB of memory is available per process, the following settings are close to optimal for CCSD jobs

memory stack 800 mb heap 100 mb global 1000 mb

for property jobs, which require more amplitudes to be stored, it is wise to favor the global allocation

memory stack 500 mb heap 100 mb global 1300 mb

If you get an error for ga_create during the iterative steps, reduce the number of DIIS vectors. If this error occurs during the four-index transformation (after d_v2 filesize appears) you need more GA space, a different 2emet, or more nodes.

The memory requirements for CCSD(T) are quite different because the triples are generated in local memory. The value of tilesize should not be larger than 30 in most cases and one should set something similar to the following

memory stack 1200 mb heap 100 mb global 600 mb

The local memory requires will be tilesizeN where N=4 for CCSD, N=6 for CCSD(T) and CCSDT, and N=8 for CCSDTQ. One should set tilesize to 16 or less for CCSDT and CCSDTQ, although symmetry will affect the local memory use significantly. The local memory usage of the CR-EOMCCSD(T) approach has recently been significantly reduced to the level of the CCSD(T) approach (2*tilesize6).

Using OpenMP in TCE

TCE compute kernels are both floating-point and bandwidth intensive, hence are amenable to multithreading. However, not all TCE kernels support OpenMP, and therefore performance may be limited by Amdahl's law. Furthermore, Global Arrays communication is not yet thread-safe and must be called outside of threaded regions, potentially limiting performance. However, even partial OpenMP is likely to improve performance relative to idle cores, in the case where memory limitations or other considerations (see below for the case of Xeon Phi coprocessors) force the user to run NWChem on a subset of the available CPU cores.

Currently, OpenMP threading is available in the following kernels:

- BLAS (assuming the chosen library supports this).

- CCSD(T) and MRCCSD(T) triples kernels.

The development version of NWChem (post-6.6) supports OpenMP more kernels, including:

- 4- and 6-dimensional tensor transposes.

- Loop-driven CCSD tensor contractions.

In most cases, NWChem runs best on CPU-only systems without OpenMP threads. However, modest OpenMP has been found to improve performance of CCSD(T) jobs. We expect the benefits of OpenMP to be more visible with time, as NWChem achieves more complete coverage with OpenMP and as platforms evolve to have more and more cores per node.

How to run large CCSD/EOMCCSD calculations

When running large CCSD or EOMCCSD calculations for large systems (number of orbitals larger than 400) and using large number of cores it is recommended to switch to workflow based implementation of CCSD/EOMCCSD methods.

The original CCSD/EOMCCSD TCE implementations are aggregates of a large number of subroutines, which calculate either recursive intermediates or contributions to residual vector. The dimensionalities of the tensors involved in a given subroutine greatly impact the memory, computation, and communication characteristics of each subroutine, which can lead to pronounced problems with load balancing. For example, for the most computationally intensive part of the CCSD/EOMCCSD approaches associated with the inclusion of 4-particle integrals, the corresponding task pool (the number of tasks in a subroutine) can easily be 2 orders of magnitude larger than the task pool for subroutines calculating one-body intermediates. To address this problem and improve the scalability of the CCSD/EOMCCSD implementations, we exploited the dependencies exposed between the task pools into classes (C) characterized by a collective task pool. This was done in such a way as to ensure sufficient parallelism in each class while minimizing the total number of such classes. This procedure enabled us to reduce the number of synchronization steps from nearly 80, in the EOMCCSD case, down to 4. Optimized versions of the CCSD/EOMCCSD codes are enabled once the

set tce:nts T

directive is used in the input file. Compared to the original CCSD/EOMCCSD implementations the new approaches requires more global memory. The new CCSD/EOMCCSD implementations provides significant improvements in the parallel performance and average time per iteration.

References:

H.S. Hu, K. Bhaskaran-Nair, E. Apra, N. Govind, K. Kowalski, J. Phys. Chem. A 118, 9087 (2014).

K. Kowalski, S. Krishnamoorthy, R.M. Olson, V. Tipparaju, E. Apra, K. Kowalski, High Performance Computing, Networking, Storage and Analysis (SC), 2011 International Conference 1 (2011).

SCF options

For parallel jobs on clusters with poor disk performance on the filesystem used for scratch_dir, it is a good idea to disable disk IO during the SCF stage of the calculation. This is done by adding semidirect memsize N filesize 0, where N is 80% of the stack memory divided by 8, as the value in this directive is the number of dwords, rather than bytes. With these settings, if the aggregate memory is sufficient to store the integrals, the SCF performance will be excellent, and it will be better than if direct is set in the SCF input block. If scratch_dir is set to a local disk, then one should use as much disk as is permissible, controlled by the value of filesize. On many high-performance computers, filling up the local scratch disk will crash the node, so one cannot be careless with these settings. In addition, on many such machines, the shared file system performance is better than that of the local disk (this is true for many NERSC systems).

Convergence criteria

It makes no sense to converge a calculation to a precision not relevant to experiment. However, the relationship between convergence criteria and calculated quantities is not fully known for some properties. For example, the effect of the convergence criteria on the polarizability is significant in some cases. In the case of CN, convergence of 10-11 is necessary to resolve the polarizability tensor components to 10-2. However, for many systems 10-7 convergence is sufficient to get accurate results for all properties. It is important to calibrate the effect of convergence on property calculations, particularly for open-shell and post-CCSD methods, on a modest basis set before relaxing the convergence criteria too much.

IO schemes and integral transformation algorithms

The effect on memory use of using the 2eorb keyword is huge. However, this option can only be used with IO=GA and an RHF/ROHF reference. There are a number of choices for the integral transformation algorithm when using spin-free integrals. The fastest algorithm is 2EMET=5, but significant disk IO is required for this algorithm. One must set permanent_dir to a fast, shared file system for this algorithm to work. If disk performance is not good, one should use either 2EMET=3 or 2EMET=4 depending on how much memory is available. If one sees a ga_create error with 2EMET=3, then switch to algorithm 4 and add split 8 to the TCE input block.

Using coprocessor architectures

CCSD(T) and MRCCSD(T) implementations for Intel MIC architectures

NWChem 6.5 and 6.6 offer the possibility of using Intel Xeon Phi hardware to perform the most computationally intensive part of the CCSD(T) and MRCCSD(T) (only in NWChem 6.6) calculations (non-iterative triples corrections). The form of input is the same as used in standard TCE CCSD(T) and MRCCSD(T) runs. To enable new implementations please follow compilation directives described below.

Required for compilation: Intel Composer XE version 14.0.3 (or later versions)

Environmental variables required for compilation:

% setenv USE_OPENMP 1 % setenv USE_OFFLOAD 1

When using MKL and Intel Composer XE version 14 (or later versions), please use the following settings

% setenv BLASOPT "-mkl -openmp -lpthread -lm" % setenv SCALAPACK "-mkl -openmp -lmkl_scalapack_ilp64 -lmkl_blacs_intelmpi_ilp64 -lpthread -lm"

The command require for compilation is

$ make FC=ifort